Roundup: #text2data - new ways of reading

By Daniela Duca, Product Manager, SAGE Ocean

‘From text to data - new ways of reading’ was a two-day event organised by the National Library of Sweden, the National Archives and Swe-Clarin. The conference brought together librarians, digital collection curators, and scholars in digital humanities and computational social science to talk about the tools and challenges involved in large-scale text collection and analysis. We learned a lot from each of the eight presentations!

The conference kicks off in the auditorium of the National Library of Sweden

Digital Humanities - or humanities computing - has been around for more than 30 years. Finn Arne Jørgensen, Professor of Environmental History at the University of Stavanger, argued that the humanities went digital even before modern computers were developed. Finn took us back in time to Father Roberto Busa, a Jesuit priest in Italy, who in 1949, through sheer determination, managed to ‘digitise’ his 13-million-word corpus into a series of punch cards. It was an impossible project, as attested by Thomas Watson at IBM - the company that ended up funding it, simply because it was interesting and rather futuristic.

Today, many researchers are still struggling to approach large amounts of text without reading every word. Jussi Karlgren, Adjunct Professor of Language Technology at Stockholm’s Royal Institute of Technology, and founder of text analysis company Gavagai, maintained that the way we engage with text is changing. We are now looking for patterns, not for the perfect document. Rather than engaging closely with the source material, we now use tools for machine-reading.

We cannot assume, however, that the approaches that work for manual reading will be effective for machine reading. More work needs to be done in mapping the questions asked by digital humanists to the tools being developed by computer scientists.

Jussi concluded that tools will come about if they are known to be needed. In other words, a researcher will seek out appropriate tools only once she is clear about her goals and what she wants to do with the corpus. It is critical at this stage to reach out to others instead of developing a tool from scratch, since there are so many already out there that can be reused and adapted.

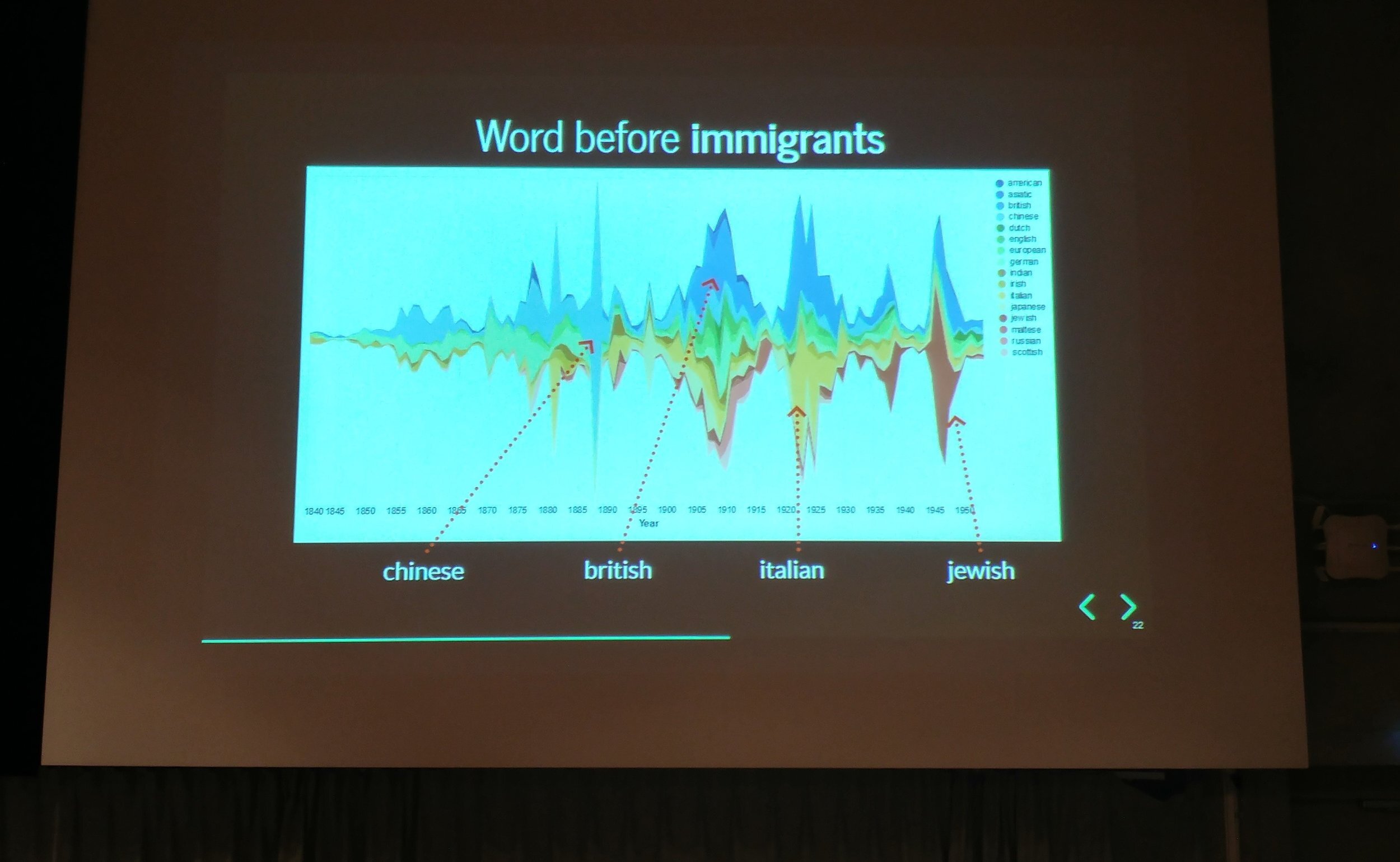

Tim Sherratt’s presentation on analysing changes in the language of belonging

Continuing the discussion on tools, Melissa Terras, Professor of Digital Cultural Heritage at the University of Edinburgh, spoke about the Transcribe Bentham project and the OCR tool (Transkribus) that she and her team developed over 10 years. Melissa raised some pertinent questions around tools that are developed using public funding: What happens after the money runs out, and how can we find valuable operational models that keep the tools running whilst also allowing researchers to use them for free?

Lotte Wilms, Digital Scholarship Advisor at The Hague’s KB Lab, provided a library’s perspective on building tools to enhance the value of their collections. Whilst libraries are working successfully with researchers to develop tools for automatic text classification, they are still struggling to transition these tools into the national infrastructures (such as https://delpher.nl, the platform hosting all digitised material in the Netherlands).

Wout Dillen, coordinator for the Belgian section of the European infrastructure for the humanities CLARIAH-VL at the University of Antwerp, attempted the impossible - to discuss potential ways of building a cross-institutional research infrastructure using short-term funding. Having struggled to achieve this within the CLARIAH framework, Wout recommended a new approach, whereby memory institutions partner with universities to sustain funding and researcher exchanges.

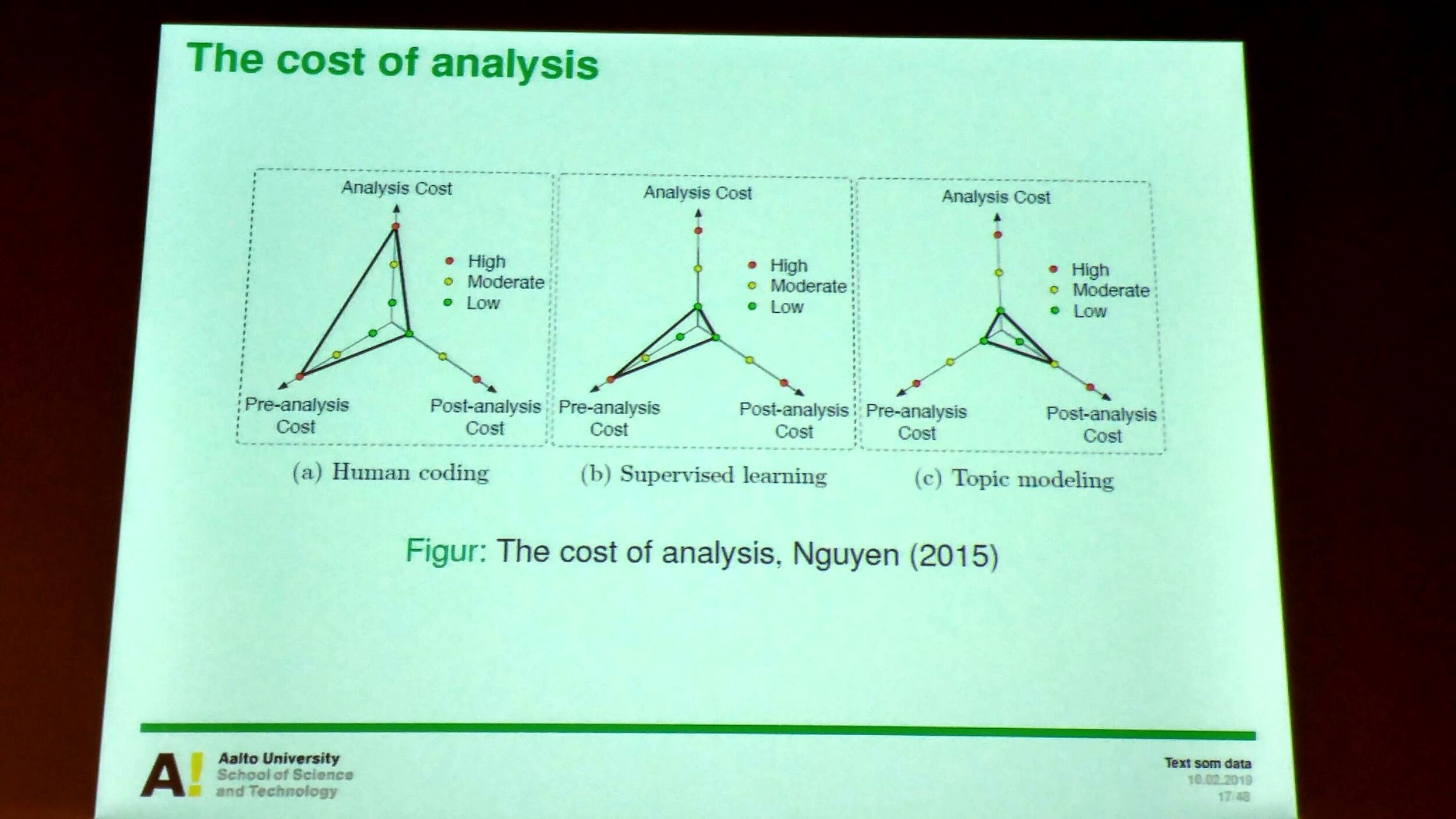

Måns Magnusson presents his work in probabilistic text analysis

What struck me most was the similarity between the digital humanists and the computational social scientists in the tools, methodologies and approaches used for working with large-scale collections. This was particularly evident in two of the presentations. Tim Sherratt, Associate Professor of Digital Heritage at the University of Canberra, showed us how he automated text analysis to read through 200 million newspaper articles to understand changes in the language of belonging (i.e. how ‘alien’ and ‘immigrant’ type concepts are used and perceived).

Måns Magnusson, a researcher in the Department of Computer Science at Aalto University, walked us through his use of tools and programming skills to analyse text probabilistically. Regarding the use of tools, he was in agreement with Jussi: we should be improving the tools already available for our research problem, and combining multiple building blocks from these existing tools. Måns’s key recommendation was to always criticise the model you develop, and to keep improving it until you are confident you have removed as much bias or error as possible.

I want to leave you with a few actionable items from Simon Tanner, Professor of Digital Cultural Heritage at King's College London, for improving the use and reuse of large text data. These were a running theme across the presentations:

Improve citation of newspapers and other digitised materials.

Use short, stable and easy-to-cite URLs for digitised materials.

Improve OCR accuracy and forget about word significance.

Open up more collections, and especially make their APIs open.

About

As Product Manager, Daniela works on new products within SAGE Ocean, collaborating with startups to help them bring their tools to market. Before joining SAGE, she worked with student and researcher-led teams that developed new software tools and services, providing business planning and market development guidance and support. She designed and ran a 2-year program offering innovation grants for researchers working with publishers on new software services to support the management of research data. She is also a visual artist, with experience in financial technology and has a PhD in innovation management.

You can connect with Daniela on Twitter.